Identifying verification code (CAPTCHA) images

Machine learning: identifying CAPTCHA images [OpenCV to locate the string, machine learning to recognize which characters it contains]

background

Since last year I have wanted to train a reasonably good model to recognize verification code (CAPTCHA) images. Most of the code you can currently find on websites or on GitHub trains the model on images of a fixed size containing a fixed number of characters. I don't think that approach is very good. I want a model that can recognize the code in an image of any size, containing any number of characters.

main framework

step1

Train a model to find where the code string is in the image. Here we can use OpenCV to train a cascade classifier (CascadeClassifier), using images of the code string as positive samples and some noise images as negative samples. This part is easy.
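OpenCV's cascade training tools (opencv_createsamples and opencv_traincascade, available in OpenCV 3.x builds) read the positive samples from an info file with one line per image: the image path, the number of objects, then `x y w h` for each box. The negatives are just a plain list of background image paths. A minimal sketch that builds the text of both files; the function name and the sample paths are hypothetical:

```python
def cascade_list_text(positives, negatives):
    """Build the text of a positives info file and a negatives (background) list.

    positives: list of (image_path, [(x, y, w, h), ...]) boxes around the code string
    negatives: list of background / noise-only image paths
    """
    info_lines = []
    for path, boxes in positives:
        coords = " ".join(f"{x} {y} {w} {h}" for x, y, w, h in boxes)
        info_lines.append(f"{path} {len(boxes)} {coords}")
    info_text = "".join(line + "\n" for line in info_lines)
    bg_text = "".join(path + "\n" for path in negatives)
    return info_text, bg_text


# Hypothetical sample data: one positive image with one box, two negatives
info, bg = cascade_list_text([("pos/1.png", [(10, 5, 40, 20)])], ["neg/1.png", "neg/2.png"])
# Write `info` to e.g. positives.info and `bg` to bg.txt before running the OpenCV tools.
```

After training, the resulting cascade.xml can be loaded with `cv2.CascadeClassifier("cascade.xml")`, and `detectMultiScale(gray)` returns the detected regions as `(x, y, w, h)` rectangles.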

step2

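We know an image will almost never contain only the code string; it nearly always has noise lines or dots as well. So the first thing to do is remove this noise and interference. Here we can binarize the image, apply OpenCV's morphological opening (cv.morphologyEx with cv.MORPH_OPEN), and then pass the cleaned image to Tesseract OCR, like this: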

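```python
import cv2 as cv
import pytesseract as tess
from PIL import Image


def verification_code_recognition(image):
    """Recognize a verification code (CAPTCHA) image."""
    # Binarize the image
    gray = cv.cvtColor(image, cv.COLOR_BGR2GRAY)  # convert to grayscale
    # Otsu threshold, inverted so the characters become white on black
    ret, binary = cv.threshold(gray, 0, 255, cv.THRESH_BINARY_INV | cv.THRESH_OTSU)
    cv.imshow("binary", binary)

    # Morphological opening: removes white specks and thin lines smaller than the kernel
    kernel = cv.getStructuringElement(cv.MORPH_RECT, (4, 4))
    bin_af = cv.morphologyEx(binary, cv.MORPH_OPEN, kernel=kernel)
    cv.imshow("bin_af", bin_af)
    cv.waitKey(0)  # keep the preview windows open until a key is pressed

    # Tesseract generally works best with dark text on a light background, so invert back
    text_image = Image.fromarray(cv.bitwise_not(bin_af))
    text = tess.image_to_string(text_image)
    print("The result:", text)
    return text
```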
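Why opening removes the noise: it is an erosion followed by a dilation with the same structuring element, so any foreground blob that cannot contain the kernel (an isolated dot, a 1-pixel noise line) disappears, while thicker strokes survive and are grown back to roughly their original shape. The pure-NumPy sketch below illustrates this, assuming an odd square kernel, zero padding at the borders and NumPy 1.20+ for `sliding_window_view`. It is for understanding only; `cv.morphologyEx` handles borders and even-sized kernels such as the 4×4 above differently.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def binary_open(img: np.ndarray, k: int = 3) -> np.ndarray:
    """Morphological opening of a 0/1 image with a k x k square kernel (k odd)."""
    if k % 2 == 0:
        raise ValueError("k must be odd so the kernel has a centre pixel")
    r = k // 2
    # Erosion: a pixel stays 1 only if every pixel in its k x k window is 1
    padded = np.pad(img, r, mode="constant", constant_values=0)
    eroded = sliding_window_view(padded, (k, k)).min(axis=(-2, -1))
    # Dilation: a pixel becomes 1 if any pixel in its k x k window is 1
    padded = np.pad(eroded, r, mode="constant", constant_values=0)
    return sliding_window_view(padded, (k, k)).max(axis=(-2, -1))


img = np.zeros((12, 12), dtype=np.uint8)
img[1, 1] = 1        # isolated noise dot
img[4:9, 4:9] = 1    # 5 x 5 "character" stroke
img[10, :] = 1       # 1-pixel-thick noise line
out = binary_open(img, 3)
```

Here the dot and the thin line vanish, while the 5×5 block comes back unchanged. This is also why the kernel size matters: a kernel larger than the character strokes would erase the characters too.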

step3

Using the cascade classifier trained in step 1, we locate the region that contains the string, crop it out, and use those crops to train the string-recognition model.
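Once the detector returns boxes as `(x, y, w, h)` (the format `cv2.CascadeClassifier.detectMultiScale` returns), cropping is plain NumPy slicing. A minimal sketch; the function name and the `margin` parameter, which keeps a few pixels of context around each box and is clipped to the image border, are hypothetical:

```python
import numpy as np


def crop_regions(img: np.ndarray, boxes, margin: int = 2):
    """Cut each (x, y, w, h) box out of img, widened by `margin` and clipped to the image."""
    h, w = img.shape[:2]
    crops = []
    for x, y, bw, bh in boxes:
        x0, y0 = max(x - margin, 0), max(y - margin, 0)
        x1, y1 = min(x + bw + margin, w), min(y + bh + margin, h)
        # NumPy indexes rows (y) first, then columns (x)
        crops.append(img[y0:y1, x0:x1].copy())
    return crops


img = np.arange(100).reshape(10, 10)
crops = crop_regions(img, [(2, 3, 4, 2)], margin=1)
```

The crops usually have different sizes, so they would typically be resized to a common input size before being fed to the recognition model.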

finish

I think this will work well. I will try it later; updates to follow……

Talk is cheap, let me show you the code.

Generate images to train a YOLOv5 model that detects the CAPTCHA string

```python
# -*- coding: utf-8 -*-
import os
import string
from random import randint, choice

import cv2
import numpy as np
from captcha.image import ImageCaptcha


class GenYoloV5Img:
    def __init__(self, img_save_dir='./images', label_save_dir='./labels', save_name='1'):
        self.char_num = 1
        self.characters = string.digits + string.ascii_uppercase + string.ascii_lowercase
        self.img_save_dir = img_save_dir
        self.label_save_dir = label_save_dir
        self.classes = ['captcha_string']
        self.save_name = str(save_name)
        # Make sure the output folders exist before anything is written
        os.makedirs(self.img_save_dir, exist_ok=True)
        os.makedirs(self.label_save_dir, exist_ok=True)
        self._create_classes_txt()

    def _create_classes_txt(self):
        # classes.txt lists one class name per line
        classes_txt_path = os.path.join(os.path.abspath(self.label_save_dir), 'classes.txt')
        if not os.path.exists(classes_txt_path):
            with open(classes_txt_path, 'w') as f:
                for i in self.classes:
                    f.write(i)
                    f.write('\n')

    @staticmethod
    def detect_boundary(x: np.ndarray):
        """Return (start, end) indices of the non-zero part of a projection profile."""
        nonzero = np.flatnonzero(x > 0)
        if nonzero.size == 0:  # blank image: fall back to the full range
            return 0, len(x) - 1
        # start keeps one pixel of margin before the first non-zero value
        return int(max(nonzero[0] - 1, 0)), int(nonzero[-1])

    def gen_cap_img(self):
        im = ImageCaptcha(randint(50, 150), randint(50, 250))  # random width and height
        img = im.create_captcha_image(chars=choice(self.characters), color=self.rgb_random(),
                                      background='white')

        # Binarize the clean image (before any noise is added) so the box covers only the characters
        gray = cv2.cvtColor(np.array(img), cv2.COLOR_RGB2GRAY)  # convert to grayscale
        ret, img_np = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV | cv2.THRESH_OTSU)  # binarize

        # Column / row projections are non-zero only where the characters are
        sum_x = img_np.sum(axis=0)
        sum_y = img_np.sum(axis=1)
        x_start, x_end = self.detect_boundary(sum_x)
        y_start, y_end = self.detect_boundary(sum_y)
        self.create_yolo_label_txt(x_start, x_end, y_start, y_end, img_np.shape[1], img_np.shape[0])

        # Add noise dots and a noise curve only after the label has been computed
        img = im.create_noise_dots(image=img, color=self.rgb_random(), width=randint(0, 5),
                                   number=randint(0, 80))
        img = im.create_noise_curve(image=img, color=self.rgb_random())
        img_save_path = os.path.join(os.path.abspath(self.img_save_dir), f'{self.save_name}.png')
        img.save(img_save_path)

    @staticmethod
    def rgb_random():
        rgb_random = (randint(0, 255),
                      randint(0, 255),
                      randint(0, 255))
        return rgb_random

    def create_yolo_label_txt(self, x_start, x_end, y_start, y_end, width, height):
        # YOLO label format: class x_center y_center width height, all normalized to [0, 1]
        center_point_x = (x_start + (x_end - x_start) / 2) / width
        center_point_y = (y_start + (y_end - y_start) / 2) / height
        string_width_rate = (x_end - x_start) / width
        string_height_rate = (y_end - y_start) / height
        label_save_path = os.path.join(os.path.abspath(self.label_save_dir), f'{self.save_name}.txt')
        with open(label_save_path, 'w') as f:
            f.write(f'0 {center_point_x} {center_point_y} {string_width_rate} {string_height_rate}\n')


if __name__ == '__main__':
    te = GenYoloV5Img(img_save_dir='captchas/images/train22424',
                      label_save_dir='captchas/labels/train22424',
                      save_name='1')
    for i in range(3000):
        te.save_name = i
        te.gen_cap_img()
```

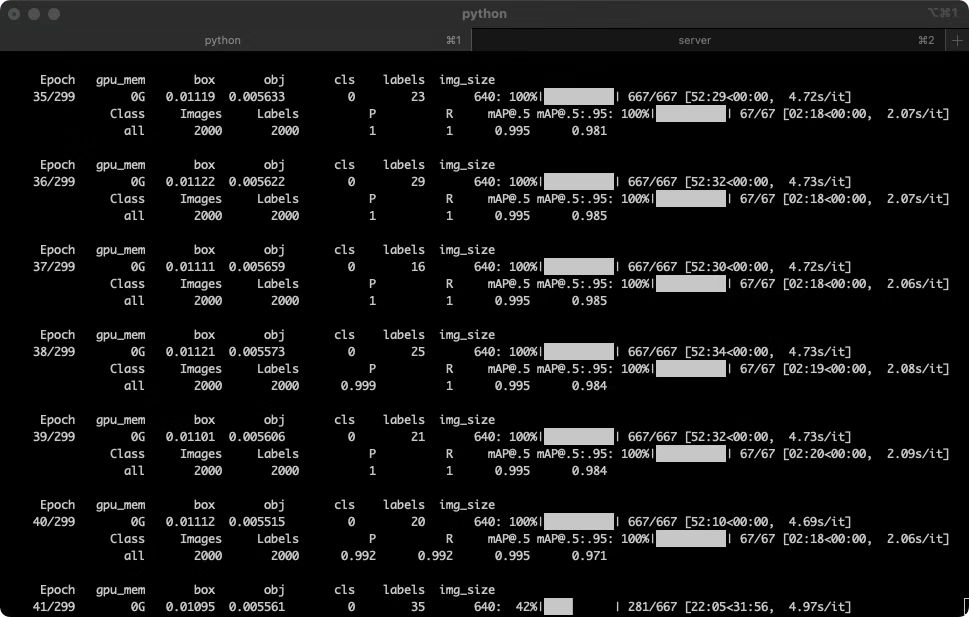
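A quick way to sanity-check the generated labels is to convert a label line back to pixel coordinates and draw the box on the image. A small sketch; `pixels_to_yolo` reproduces the formula used in `create_yolo_label_txt`, and both function names are hypothetical helpers, not part of YOLOv5:

```python
def pixels_to_yolo(x_start, x_end, y_start, y_end, width, height, cls=0):
    """Same formula as create_yolo_label_txt: normalized centre x/y, width, height."""
    xc = (x_start + (x_end - x_start) / 2) / width
    yc = (y_start + (y_end - y_start) / 2) / height
    return f"{cls} {xc} {yc} {(x_end - x_start) / width} {(y_end - y_start) / height}"


def yolo_to_pixels(label_line, width, height):
    """Invert a YOLO label line to (cls, x_start, y_start, x_end, y_end) in pixels."""
    cls, xc, yc, bw, bh = label_line.split()
    xc, bw = float(xc) * width, float(bw) * width
    yc, bh = float(yc) * height, float(bh) * height
    return int(cls), round(xc - bw / 2), round(yc - bh / 2), round(xc + bw / 2), round(yc + bh / 2)


line = pixels_to_yolo(10, 50, 20, 40, width=100, height=80)
box = yolo_to_pixels(line, width=100, height=80)
```

The recovered corners can then be drawn with `cv2.rectangle(img, (x_start, y_start), (x_end, y_end), (0, 0, 255), 1)` to check visually that the box sits on the characters and not on the noise.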

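OK, let's start training. These are the training options from parse_opt in YOLOv5's train.py; --data points at the CAPTCHA dataset config (data/captcha_string.yaml) and --device is set to cpu: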

```python
import argparse
from pathlib import Path

FILE = Path(__file__).resolve()
ROOT = FILE.parents[0]  # YOLOv5 root directory (train.py lives here)


def parse_opt(known=False):
    parser = argparse.ArgumentParser()
    parser.add_argument('--weights', type=str, default=ROOT / 'yolov5s.pt', help='initial weights path')
    parser.add_argument('--cfg', type=str, default='', help='model.yaml path')
    parser.add_argument('--data', type=str, default=ROOT / 'data/captcha_string.yaml', help='dataset.yaml path')
    parser.add_argument('--hyp', type=str, default=ROOT / 'data/hyps/hyp.scratch-low.yaml', help='hyperparameters path')
    parser.add_argument('--epochs', type=int, default=300)
    parser.add_argument('--batch-size', type=int, default=5, help='total batch size for all GPUs, -1 for autobatch')
    parser.add_argument('--imgsz', '--img', '--img-size', type=int, default=640, help='train, val image size (pixels)')
    parser.add_argument('--rect', action='store_true', help='rectangular training')
    parser.add_argument('--resume', nargs='?', const=True, default=False, help='resume most recent training')
    parser.add_argument('--nosave', action='store_true', help='only save final checkpoint')
    parser.add_argument('--noval', action='store_true', help='only validate final epoch')
    parser.add_argument('--noautoanchor', action='store_true', help='disable AutoAnchor')
    parser.add_argument('--evolve', type=int, nargs='?', const=300, help='evolve hyperparameters for x generations')
    parser.add_argument('--bucket', type=str, default='', help='gsutil bucket')
    parser.add_argument('--cache', type=str, nargs='?', const='ram', help='--cache images in "ram" (default) or "disk"')
    parser.add_argument('--image-weights', action='store_true', help='use weighted image selection for training')
    parser.add_argument('--device', default='cpu', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')
    parser.add_argument('--multi-scale', action='store_true', help='vary img-size +/- 50%%')
    parser.add_argument('--single-cls', action='store_true', help='train multi-class data as single-class')
    parser.add_argument('--optimizer', type=str, choices=['SGD', 'Adam', 'AdamW'], default='SGD', help='optimizer')
    parser.add_argument('--sync-bn', action='store_true', help='use SyncBatchNorm, only available in DDP mode')
    parser.add_argument('--workers', type=int, default=2, help='max dataloader workers (per RANK in DDP mode)')
    parser.add_argument('--project', default=ROOT / 'runs/train', help='save to project/name')
    parser.add_argument('--name', default='exp', help='save to project/name')
    parser.add_argument('--exist-ok', action='store_true', help='existing project/name ok, do not increment')
    parser.add_argument('--quad', action='store_true', help='quad dataloader')
    parser.add_argument('--cos-lr', action='store_true', help='cosine LR scheduler')
    parser.add_argument('--label-smoothing', type=float, default=0.0, help='Label smoothing epsilon')
    parser.add_argument('--patience', type=int, default=100, help='EarlyStopping patience (epochs without improvement)')
    parser.add_argument('--freeze', nargs='+', type=int, default=[0], help='Freeze layers: backbone=10, first3=0 1 2')
    parser.add_argument('--save-period', type=int, default=-1, help='Save checkpoint every x epochs (disabled if < 1)')
    parser.add_argument('--local_rank', type=int, default=-1, help='DDP parameter, do not modify')

    # Weights & Biases arguments
    parser.add_argument('--entity', default=None, help='W&B: Entity')
    parser.add_argument('--upload_dataset', nargs='?', const=True, default=False, help='W&B: Upload data, "val" option')
    parser.add_argument('--bbox_interval', type=int, default=-1, help='W&B: Set bounding-box image logging interval')
    parser.add_argument('--artifact_alias', type=str, default='latest', help='W&B: Version of dataset artifact to use')

    opt = parser.parse_known_args()[0] if known else parser.parse_args()
    return opt
```
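The --data option points at data/captcha_string.yaml, whose contents are not shown here. Below is a sketch of what it might contain, built from Python so the paths line up with the generator script. It assumes the `path`/`train`/`val`/`nc`/`names` keys used by YOLOv5's bundled dataset configs (such as coco128.yaml); the root path and the validation folder name are hypothetical, since the script above only writes a training folder. YOLOv5 locates each label file by replacing `/images/` with `/labels/` in the image path, which is why the generator writes to captchas/images/... and captchas/labels/....

```python
def dataset_yaml_text(root, train_dir, val_dir, names):
    """Return the text of a minimal YOLOv5-style dataset config."""
    lines = [
        f"path: {root}  # dataset root dir",
        f"train: {train_dir}  # train images (relative to path)",
        f"val: {val_dir}  # val images (relative to path)",
        f"nc: {len(names)}  # number of classes",
        "names: [" + ", ".join(f"'{n}'" for n in names) + "]  # class names",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical values matching the generator's output folders
text = dataset_yaml_text("../captchas", "images/train22424", "images/val22424", ["captcha_string"])
# Save it inside the YOLOv5 directory, e.g.:
# Path("data/captcha_string.yaml").write_text(text)
```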

The training started two days ago, and it looks like it will take about ten days to finish, so let's wait and see how the model performs.

I'm using an Apple M1 chip, and I have to say it's far too slow!!!
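For a sense of scale, here is a back-of-the-envelope check of the ten-day estimate. It assumes all 3000 generated images are in the training set and uses the options above (--batch-size 5, --epochs 300); it ignores per-epoch validation and data loading, so treat it as rough.

```python
import math


def total_iterations(num_images: int, batch_size: int, epochs: int) -> int:
    # One iteration processes one batch; a final partial batch still counts as one
    return math.ceil(num_images / batch_size) * epochs


def seconds_per_iteration(total_days: float, iterations: int) -> float:
    # Spread the whole wall-clock budget evenly over all iterations
    return total_days * 24 * 3600 / iterations


iters = total_iterations(3000, 5, 300)        # 600 batches per epoch x 300 epochs
per_iter = seconds_per_iteration(10, iters)
```

If the ten-day estimate holds, that is 180,000 iterations at roughly 4.8 seconds per batch of 5 images on the CPU.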
